The Hidden Cost of Detection Tuning

We’re going to say something you won’t hear from most cybersecurity vendors: generating more alerts doesn’t equate to better security, especially given the resource constraints facing today’s Security Operations Centers (SOCs). Over the last few years, SOCs have made a concerted effort to bring overall alert volume in line with their teams’ actual analysis and investigation capacity. This practice is often referred to as detection tuning.

The Trade-offs of Detection Tuning

Engineers must fine-tune detection systems to manage the deluge of alerts without compromising security. This involves navigating the delicate balance between false positives and false negatives.

- False Positives: These are alerts that incorrectly flag normal activities as threats. High false positive rates lead to alert overload, where analysts cannot investigate all alerts due to capacity constraints.

- False Negatives: These occur when actual threats are missed by the detection systems, increasing the risk to the organization.

To maintain the balance between these issues, we turn to data science and the concept of a precision-recall trade-off. Precision and Recall are two metrics used to evaluate the performance of a classification model.

Precision (which falls as false positives rise) measures the accuracy of the model’s positive predictions. It is the ratio of true positive predictions to all predicted positives, and it answers the question, “Of all the instances labeled as positive, how many are actually positive?”

Recall (which falls as false negatives rise) measures the model’s ability to identify all relevant instances within a dataset. It is the ratio of true positive predictions to all actual positives, and it answers the question, “Of all the actual positive instances, how many did we correctly identify as positive?”

In the precision-recall trade-off, improving Precision typically reduces Recall, and vice versa. There is no practical way to be perfect in both.
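To make the two ratios concrete, here is a minimal Python sketch that computes them from raw counts. The function name and the alert numbers are hypothetical and chosen only for illustration; they do not come from any real SOC.

```python
def precision_recall(true_pos: int, false_pos: int, false_neg: int) -> tuple[float, float]:
    """Return (precision, recall) from confusion-matrix counts."""
    predicted_pos = true_pos + false_pos   # everything the detection alerted on
    actual_pos = true_pos + false_neg      # every real threat, alerted or not
    precision = true_pos / predicted_pos if predicted_pos else 0.0
    recall = true_pos / actual_pos if actual_pos else 0.0
    return precision, recall


# Hypothetical numbers: 40 alerts on real threats, 960 alerts on benign
# activity, and 10 real threats that never produced an alert.
precision, recall = precision_recall(true_pos=40, false_pos=960, false_neg=10)
print(f"precision={precision:.2f} recall={recall:.2f}")  # precision=0.04 recall=0.80
```

In this made-up case the detection catches most real threats (high Recall), but only 4% of its alerts are worth an analyst’s time (low Precision).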

Security teams often have to decide on the threshold that balances the risk of missing real threats against the cost of investigating too many non-threats. Depending on an organization’s specific security needs and resources, the optimal point on the precision-recall curve may vary.
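The effect of moving the threshold can be sketched in a few lines of Python. The risk scores, labels, and threshold values below are invented for illustration, and a real detection pipeline would rarely have clean ground-truth labels like these.

```python
# Hypothetical (risk_score, is_real_threat) pairs for ten scored events.
EVENTS = [
    (0.95, True), (0.90, True), (0.80, False), (0.70, True),
    (0.60, False), (0.50, False), (0.40, True), (0.30, False),
    (0.20, False), (0.10, False),
]


def evaluate(threshold: float) -> tuple[float, float]:
    """Alert on every score >= threshold and return (precision, recall)."""
    tp = sum(1 for score, real in EVENTS if score >= threshold and real)
    fp = sum(1 for score, real in EVENTS if score >= threshold and not real)
    fn = sum(1 for score, real in EVENTS if score < threshold and real)
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall


# Lowering the threshold makes the detection more sensitive.
for threshold in (0.85, 0.65, 0.35):
    p, r = evaluate(threshold)
    print(f"threshold={threshold:.2f} precision={p:.2f} recall={r:.2f}")
```

With this toy data, the strictest threshold produces only true positives but misses half of the real threats, while the loosest threshold catches every threat at the cost of several benign alerts.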

Understanding the Trade-off

PsExec is a legitimate software tool IT administrators use to execute processes remotely on other systems. Unfortunately, it’s a powerful utility that attackers can exploit to carry out malicious actions over the network.

The security goal is to detect malicious use of PsExec without hindering legitimate IT administrative work. A common way to pursue that goal is to focus on reducing false positives while accepting the risk of false negatives.

Focusing on Reducing False Positives

To avoid overwhelming your security team with false positives that could lead to alert overload, you may decide to add an exclusion rule to your detection system. The rule ignores any PsExec activity that originates from recognized IT administrator accounts, reducing the number of alerts generated by legitimate uses of PsExec.

- Pros: This approach keeps the number of unnecessary alerts low, ensuring that security analysts can focus on more likely threats without being distracted by frequent benign alerts from known admin activities.

- Cons: The key drawback here is the potential increase in false negatives. If an attacker gains access to an IT administrator’s credentials and uses PsExec maliciously, the activity might not trigger an alert because the system is configured to trust actions initiated from these accounts.

Accepting the Risk of False Negatives: By excluding PsExec activities from known IT admin accounts from triggering alerts, you accept the risk associated with false negatives. A false negative in this context means failing to detect a real security threat when an attacker uses compromised IT admin credentials to carry out actions that would normally be trusted.

- Impact: This decision directly impacts the system’s Recall. While Precision increases (fewer irrelevant alerts), Recall decreases (potential to miss actual threats).
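The exclusion described above can be sketched as follows. This is a simplified illustration, not a production detection: the account names, the `ProcessEvent` type, and the three sample events are hypothetical, and matching on the process name alone is an assumption made to keep the example short.

```python
from dataclasses import dataclass

# Hypothetical allowlist of recognized IT administrator accounts.
TRUSTED_ADMINS = {"it-admin-01", "it-admin-02"}


@dataclass(frozen=True)
class ProcessEvent:
    user: str
    process_name: str
    is_malicious: bool  # ground truth, known only in hindsight


def should_alert(event: ProcessEvent, exclude_admins: bool) -> bool:
    """Alert on PsExec execution, optionally skipping trusted admin accounts."""
    if event.process_name.lower() != "psexec.exe":
        return False
    if exclude_admins and event.user in TRUSTED_ADMINS:
        return False
    return True


EVENTS = [
    ProcessEvent("it-admin-01", "PsExec.exe", False),  # routine admin work
    ProcessEvent("it-admin-02", "PsExec.exe", True),   # stolen admin credentials
    ProcessEvent("jdoe", "PsExec.exe", True),          # attacker on a user account
]


def summarize(exclude_admins: bool) -> tuple[int, int, int]:
    """Return (alerts raised, false positives, real threats missed)."""
    alerts = [e for e in EVENTS if should_alert(e, exclude_admins)]
    false_positives = sum(1 for e in alerts if not e.is_malicious)
    missed = sum(
        1 for e in EVENTS if e.is_malicious and not should_alert(e, exclude_admins)
    )
    return len(alerts), false_positives, missed


print(summarize(exclude_admins=True))   # (1, 0, 1)
print(summarize(exclude_admins=False))  # (3, 1, 0)
```

With the exclusion enabled, the benign admin alert disappears, but so does the alert for the compromised admin account. That missed event is the false negative the team has agreed to accept.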

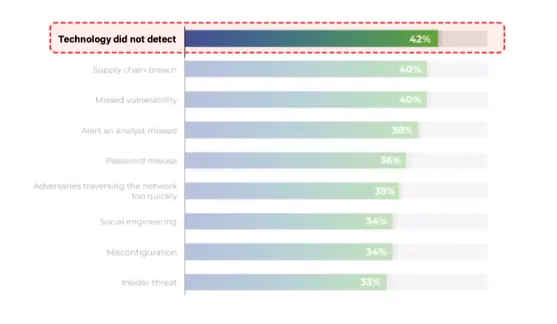

Most security teams prioritize false positive reduction because consistently overloading the team is unsustainable. There is a hidden cost to these trade-offs that is rarely discussed: the well-tuned detections that have allowed your SOC team to keep their heads above water have also eroded your detection fabric, creating a large number of gaps that attackers can slip through. This complicates tuning, because it becomes a business decision about how much risk the organization will tolerate and where.

During a presentation at BSides SF this year, cybersecurity expert Caleb Sima highlighted the critical risk posed by disregarding low and medium-severity alerts. He engaged the audience with a probing question: “How many of you have thousands and thousands of alerts in your SIEM or detection and response that are medium and lows that need to be triaged? And you will not do the triage because you just don’t have the time - you don’t have the resources?”

Sima emphasized that SOCs need to view their ability to triage as a vital aspect of their overall threat coverage. He explained, “It’s highly likely that in most attacks, the event was there. It’s that no one looked further into it.” His remarks suggest that many security breaches could be prevented if low and medium-severity alerts were examined and addressed more diligently. This often-overlooked aspect of SOC operations can lead to significant vulnerabilities, as potentially critical threats might go unnoticed until it is too late.

Eliminating the Trade-off

Given the inherent challenges in balancing Precision and Recall, our next step involves managing and eliminating these trade-offs. What if the limitations imposed by the current detection frameworks could be transcended? Imagine a scenario where SOCs can extend their coverage and enhance the depth of their investigations without the constant worry of resource depletion.

By leveraging advanced AI technology, we can introduce a paradigm shift in detecting and managing threats. This transition to AI-augmented security analysts marks a critical evolution, allowing SOCs to increase detection sensitivity and expand investigative capabilities without the traditional concerns of overwhelming analysts or missing subtle yet critical threats.

Let AI Agents Do the Heavy Lifting

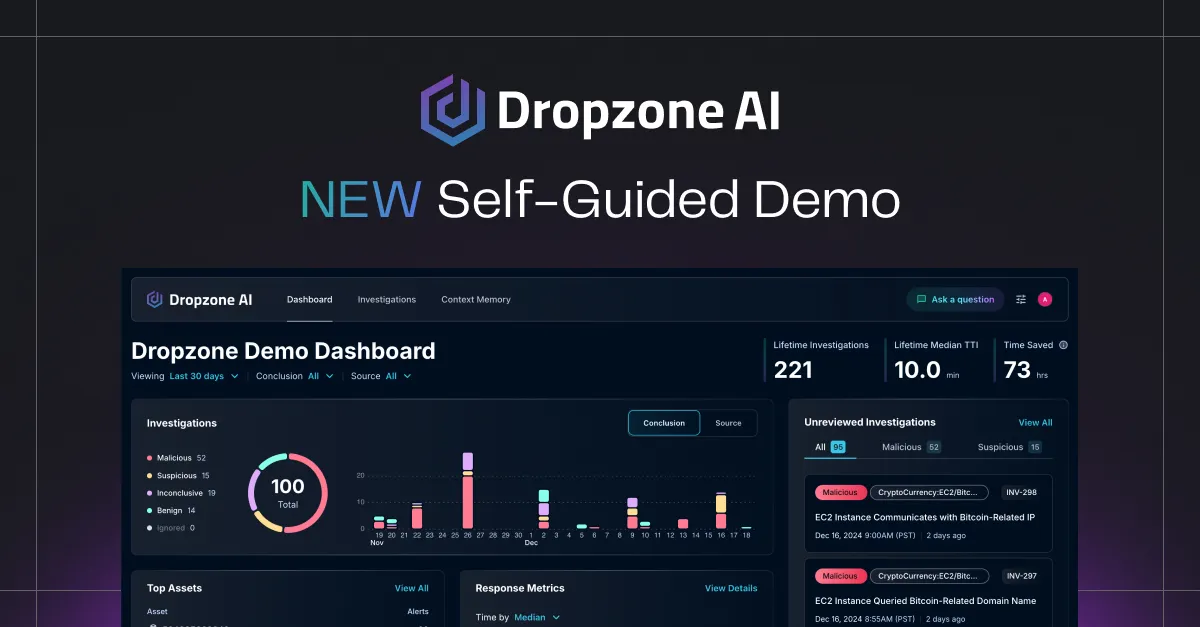

With AI-augmented security analysts, human analysts can offload most alert triage and investigation to AI agents. These AI agents are trained to replicate expert knowledge and techniques and use recursive reasoning to investigate every alert until they can reach a conclusion. They integrate with your IT and business systems to pull contextual data, refer to third-party resources, and query your existing security tools just as an expert analyst would. All their evidence is documented, and the investigation is summarized with a report.

Adding AI agents dramatically changes the detection tuning calculus and practically eliminates the need for trade-offs. Turn up the sensitivity on those detections! AI agents, which never tire, will thoroughly investigate every alert and pass the 2-5% of alerts they flag as true positives to their human counterparts for validation.
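As a back-of-the-envelope illustration of what that 2-5% range means for human workload, consider the sketch below. The daily alert volume is a hypothetical figure, and the function name is invented for this example.

```python
def escalated_range(total_alerts: int,
                    low_rate: float = 0.02,
                    high_rate: float = 0.05) -> tuple[int, int]:
    """Return the (low, high) number of alerts passed to human analysts."""
    return round(total_alerts * low_rate), round(total_alerts * high_rate)


# Hypothetical SOC receiving 1,000 alerts per day.
low, high = escalated_range(1000)
print(f"Alerts needing human validation: {low} to {high}")  # 20 to 50
```

Under these assumptions, analysts would validate a few dozen findings a day instead of triaging a thousand raw alerts.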

How Detection Tuning Changes with AI-augmented Security Analysts

None of this is to say that AI agents eliminate the need for detection tuning. How tuning is done will simply change. Without worrying about alert fatigue, security engineers will be able to:

- Add more experimental or test detections - Previously, security engineers may have been reluctant to deploy some detections because of the risk of too many false positives. If we look at the GitHub repo for Sigma rules, many are tagged as “experimental” or “test.” Examples include these 10 detections for malicious activity within Kubernetes clusters and this detection that looks for SQL queries containing 'drop', 'truncate', 'dump', 'select \*', and other slightly suspicious commands.

- Increase sensitivity - Any rule with a threshold or parameterized conditions may be a candidate for increased sensitivity since AI agents will handle the grunt work of triaging these before they would ever reach a human. Some EDR/NDR/XDR vendors also have sensitivity settings that you could turn up safely.

- Eliminate exclusions that create blind spots - Using our example of a PsExec detection from earlier, security engineers would be able to remove exclusions without worrying about the impact on their colleagues’ mental health, and they’d catch sneaky bad actors who manage to steal an IT admin’s credentials.
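To illustrate what increasing sensitivity means for a threshold-based rule, here is a minimal Python sketch. The failed-login scenario, the source addresses, and the threshold values are all hypothetical and are not taken from any specific product or Sigma rule.

```python
from collections import Counter

# Hypothetical failed-login events, one source address per failure.
FAILED_LOGINS = ["10.0.0.5"] * 12 + ["10.0.0.8"] * 6 + ["10.0.0.9"] * 3


def sources_over_threshold(failures: list[str], threshold: int) -> list[str]:
    """Return the sources whose failure count meets or exceeds the threshold."""
    counts = Counter(failures)
    return sorted(src for src, n in counts.items() if n >= threshold)


print(sources_over_threshold(FAILED_LOGINS, threshold=10))  # ['10.0.0.5']
print(sources_over_threshold(FAILED_LOGINS, threshold=5))   # ['10.0.0.5', '10.0.0.8']
```

Lowering the threshold surfaces quieter sources that a conservative setting would never report. If AI agents triage the extra alerts first, that added volume no longer lands directly on human analysts.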

Make Attackers Work Harder

Savvy attackers know to hide in noisy areas or use legitimate protocols and tools to evade detection. Security engineers tuning detections face a quandary: Should they increase sensitivity to catch those edge cases at the risk of creating too many false positives? AI agents eliminate this trade-off, freeing detection engineers from worrying about alert fatigue.

With the aid of AI agents, SOCs will be able to thoroughly investigate every alert sent their way and involve human analysts only to validate true positive findings. Detection engineers will be free to turn up the sensitivity of their alerts, try out more experimental detections, and ultimately increase the chances of catching malicious behavior on the network. Attackers will need to work even harder to avoid getting caught.

If you’re ready to leverage the power of GenAI to reduce manual alert analysis by 95%, contact us now. If you want to learn more, we encourage you to explore our demo gallery to see how the Dropzone AI product works.