Introduction

There’s a lot of buzz about fully autonomous SOCs, with some claiming that AI and automation will completely take over security operations. But the truth is that SOCs will always need human expertise: no AI can replace the judgment and insight of skilled SOC analysts. The idea of an autonomous SOC does come from a real need. SOC teams are under enormous pressure, with endless alerts and limited resources. Most struggle to keep up with their workload, so the promise of fully automating operations sounds like the perfect solution. However, the real power of AI isn’t in replacing people; it’s in making their jobs easier and more efficient.

The Promise and Limits of AI in the SOC

Transformative Potential

Automation and AI are changing how Security Operations Centers (SOCs) manage their workload, helping teams handle the growing number of alerts and increasingly sophisticated threats. These systems use large language models (LLMs) with multi-step (recursive) reasoning to analyze complex relationships and contextual patterns across data. They can synthesize findings from logs, configurations, and documentation, providing actionable insights that help teams address gaps proactively. Because this analysis is automated, potential issues can be flagged quickly, giving SOC teams more time to respond effectively.

AI is also making alert investigations and exposure management much smoother. Instead of starting from scratch, analysts receive detailed reports generated by AI, complete with findings and actionable insights. These systems handle repetitive (and boring) tasks so analysts can focus on higher-priority projects.

The Reality Check

Fully autonomous SOCs are unlikely to become a reality anytime soon, and for good reason. Bad actors are getting increasingly creative in finding new ways to exploit vulnerabilities no one anticipated. This means that adapting an organization’s cybersecurity posture to new threats will require the judgment and creativity of experienced human analysts. While AI is incredibly effective at analyzing large datasets and spotting patterns, it cannot fully replace the decision-making required to address complex or novel situations.

There are also risks in relying solely on AI: even the most advanced systems need human oversight to catch "hallucinated" conclusions. In Gartner’s report Predicts 2025: There Will Never Be an Autonomous SOC, the firm predicts that “by 2027, 30% of SOC leaders will face challenges integrating AI into production”. To address this, Gartner recommends that SOC leaders focus on phased AI adoption. Start by integrating AI into non-critical workflows like alert triage or log analysis to build familiarity and trust within the team. Pilot AI tools in specific use cases, gather feedback, and refine the systems before scaling to production. Regularly train staff to work alongside AI agents, ensuring they can spot errors or inconsistencies and maintain control over decision-making.

Augmentation Over Replacement

Why People Matter in the SOC

Human analysts bring valuable skills to the SOC, including creative problem-solving, strategic decision-making, and understanding complex business contexts. These strengths are irreplaceable when handling nuanced scenarios and prioritizing security efforts.

However, overreliance on AI could erode these skills. Gartner’s autonomous SOC research supports this concern, predicting that “by 2030, 75% of SOC teams may lose foundational analysis capabilities due to too much dependence on automation.” To avoid skill erosion, organizations should create a balanced workflow where analysts regularly practice core analysis skills, such as investigating complex incidents or building detection rules. Develop training programs that simulate real-world scenarios requiring human judgment, and pair analysts with AI agents in a way that encourages critical thinking rather than blind reliance. This helps the team stay sharp while leveraging AI for efficiency.

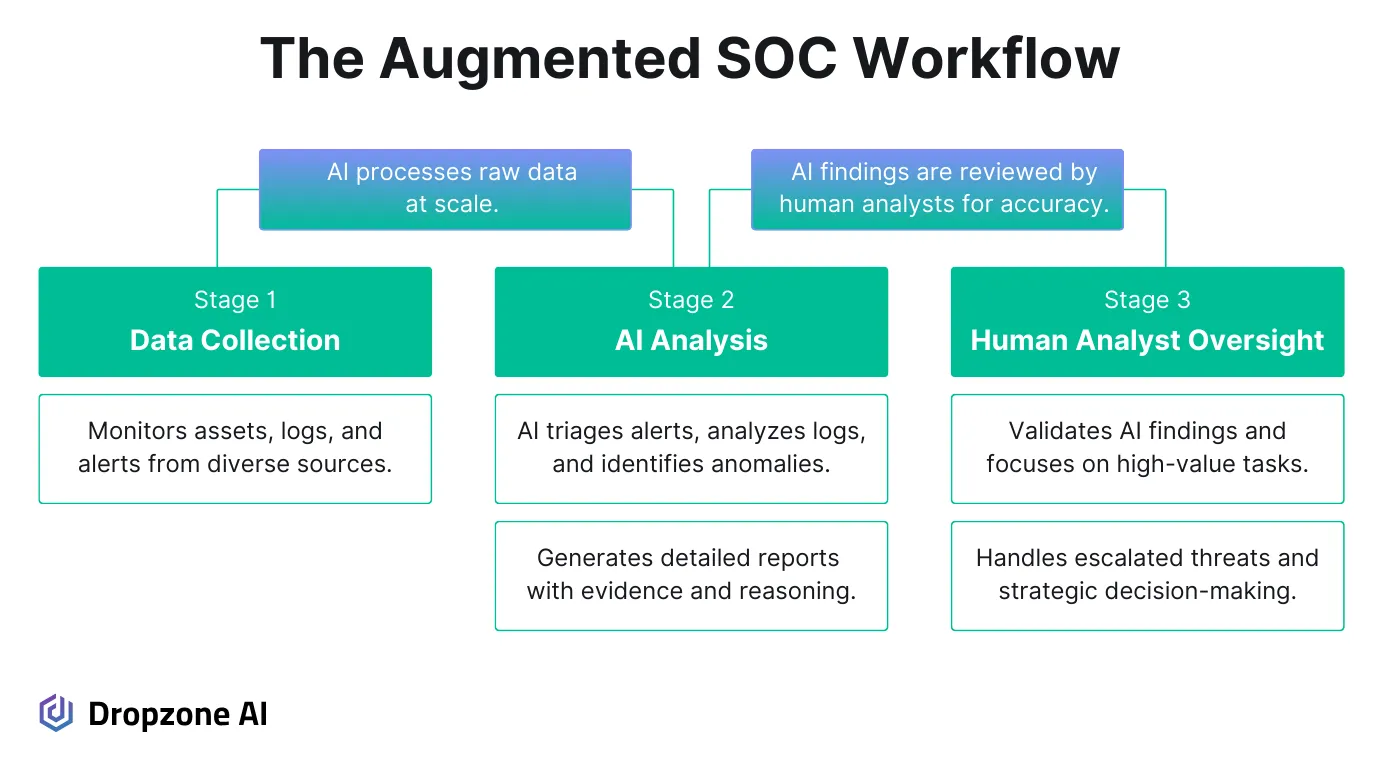

The Augmentation Model

AI agents handle repetitive tasks in the SOC, freeing analysts to focus on more important work. For instance, they can handle alert triage, gather data, and analyze logs, so analysts don’t have to spend hours sifting through raw information. Instead, they get organized findings and can focus their energy on more proactive efforts such as threat hunting, detection engineering, incident response planning, and collaborating with other teams. This augmented approach helps teams work more efficiently while reducing the stress of managing endless manual tasks.

The Gartner report advises targeting routine, repetitive tasks such as alert handling: “SOC initiatives that focus on task and workflow optimization, as opposed to end-to-end automation, realize more impactful results. It is more realistic to aim to scale SOC roles such as incident investigator in areas such as alert contextualization rather than replace the entire workflow or role.”

For AI to truly support analysts, it needs to be transparent. Agentic AI systems should show their reasoning and provide evidence for their findings so analysts can review them and confirm their accuracy. These systems work best with human oversight, allowing analysts to validate outputs and make the final call. This setup improves efficiency, helps reduce burnout, and keeps analysts focused on the meaningful, high-impact parts of their work.
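To make the human-in-the-loop idea concrete, here is a minimal Python sketch of how an AI-generated finding might be represented so that its reasoning and evidence travel with it, and so that only an analyst can close it. The `Finding` record, the `review` and `is_closed` functions, and the verdict labels are hypothetical illustrations chosen for this example, not the API of Dropzone AI or any other product.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Finding:
    """Hypothetical AI-generated finding: a conclusion plus the evidence behind it."""
    alert_id: str
    conclusion: str                      # the agent's verdict, e.g. "benign" or "malicious"
    reasoning: str                       # the agent's explanation, shown to the analyst
    evidence: List[str] = field(default_factory=list)  # log lines, query results, artifacts
    analyst_verdict: Optional[str] = None  # set only by a human reviewer


def review(finding: Finding, analyst_agrees: bool, override: Optional[str] = None) -> Finding:
    """Record the analyst's decision; the human verdict is always the final call."""
    if not finding.evidence:
        # A finding without evidence cannot be verified, so reject it outright.
        raise ValueError("Refusing to review a finding with no supporting evidence")
    if analyst_agrees:
        finding.analyst_verdict = finding.conclusion
    else:
        if override is None:
            raise ValueError("An override verdict is required when the analyst disagrees")
        finding.analyst_verdict = override
    return finding


def is_closed(finding: Finding) -> bool:
    """A finding only counts as resolved once a human has recorded a verdict."""
    return finding.analyst_verdict is not None


if __name__ == "__main__":
    f = Finding(
        alert_id="A-1001",
        conclusion="benign",
        reasoning="Login came from the corporate VPN egress IP",
        evidence=["auth.log: accepted login for jdoe from VPN egress"],
    )
    print(is_closed(f))  # False: no human verdict yet
    review(f, analyst_agrees=False, override="malicious")
    print(f.analyst_verdict)  # malicious
```

The design choice worth noting is that the agent's `conclusion` and the human's `analyst_verdict` are stored separately, so disagreements stay visible and can later feed back into tuning the AI system.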

Building the Augmented SOC

New Skills and Roles

With automation and AI playing a bigger role in SOCs, teams need to expand their skills and bring in new expertise. Hiring people with programming skills and a code-first mindset is becoming more important, as these abilities help teams work effectively with AI agents. Training current staff in testing, data management, and process improvement will also make a big difference. Analysts should learn how to fine-tune AI systems while keeping human judgment in the loop.

A good way to think about AI systems is as junior team members who need mentoring and feedback to improve. Human oversight will always be essential to ensure AI systems perform as expected, and analysts need to maintain their expert edge so they can help AI agents improve. In practice, humans in the SOC will take on more of a manager and supervisor role.

Without investing in skill-building, SOCs could face serious staffing issues. Gartner’s Autonomous SOC report also predicts that “by 2028, one-third of senior SOC roles could stay vacant if no focus is on upskilling teams”. To mitigate this risk, start building upskilling programs now. Offer training in programming, data management, and process optimization to prepare current staff for roles that require working with agentic AI systems.

Establish mentorship programs where experienced analysts guide junior team members in adapting to new technology. Partner with external organizations or invest in certifications to fill knowledge gaps and attract skilled candidates for senior roles.

Practical Recommendations

Measure Gains

It’s important to track how AI integration affects your SOC’s operational efficiency. Focus on measurable improvements, such as faster threat detection, fewer false positives, or less time spent triaging alerts. Rather than getting caught up in flashy promises of automation, prioritize solutions that enhance your team’s performance and make their day-to-day work more effective.
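As an illustration of measuring gains, the arithmetic below compares a baseline period with a period after AI-assisted triage was introduced. It is a minimal Python sketch: the metric definitions, the `false_positive` / `true_positive` labels, and all numbers are placeholders chosen for illustration, not real measurements or any product's reporting format.

```python
from statistics import mean
from typing import List


def mean_minutes(durations: List[float]) -> float:
    """Average time in minutes per alert, e.g. from alert creation to triage decision."""
    if not durations:
        raise ValueError("need at least one duration")
    return mean(durations)


def false_positive_rate(verdicts: List[str]) -> float:
    """Fraction of closed alerts labeled 'false_positive' (label names are assumptions)."""
    if not verdicts:
        return 0.0
    return verdicts.count("false_positive") / len(verdicts)


def percent_change(before: float, after: float) -> float:
    """Relative change versus the baseline; negative values mean the metric went down."""
    if before == 0:
        raise ValueError("baseline must be non-zero")
    return (after - before) / before * 100


if __name__ == "__main__":
    # Placeholder numbers for illustration only, not real SOC data.
    baseline_triage = [30, 45, 25, 40]  # minutes per alert before AI assistance
    assisted_triage = [10, 12, 8, 10]   # minutes per alert with AI assistance
    before = mean_minutes(baseline_triage)
    after = mean_minutes(assisted_triage)
    print(f"Mean triage time: {before:.0f} -> {after:.0f} min "
          f"({percent_change(before, after):.1f}%)")
    # Mean triage time: 35 -> 10 min (-71.4%)

    verdicts = ["false_positive", "true_positive", "false_positive", "false_positive"]
    print(f"False positive rate: {false_positive_rate(verdicts):.0%}")  # 75%
```

Whichever metrics you choose, capture a baseline before the rollout; without one, a percentage change has nothing to anchor to.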

Document Processes

Automation works best when it’s built to scale existing processes rather than completely replacing them. Documenting current workflows allows teams to identify which areas can benefit most from AI integration. It’s also smart to plan for contingencies so the team can maintain operations even if an AI-powered system encounters issues or fails.

Adjust Job Descriptions

As AI agents become part of daily operations, analysts will need to take on new roles, such as reviewing and mentoring these non-human coworkers. Training analysts to evaluate AI-generated outputs and provide feedback is key to improving the performance of these systems over time. Consider formalizing this responsibility by including AI system oversight in job descriptions and performance reviews to ensure continuous improvement.

Align AI with SOC Objectives

The organization's AI projects should align with the SOC’s detection and response priorities. Review existing AI initiatives and identify opportunities to integrate them into workflows that address current needs. For example, AI-driven detection capabilities should enhance existing processes rather than add unnecessary complexity. This alignment ensures that AI is a valuable addition to your SOC’s overall strategy.

Conclusion

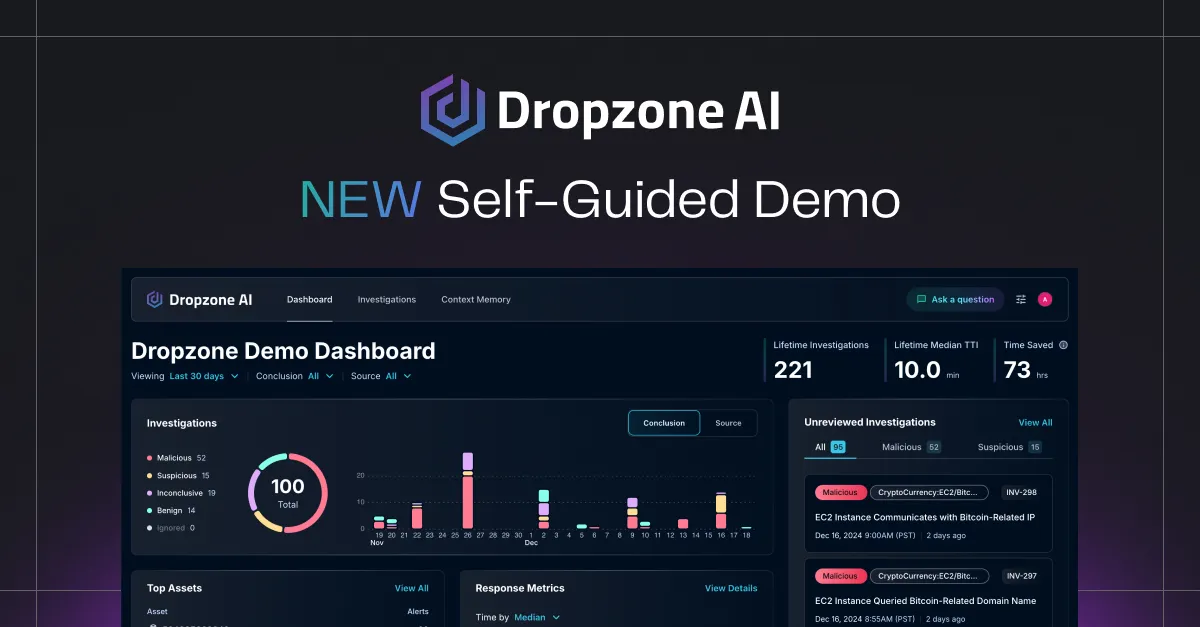

The future of SOCs lies in AI augmentation, not full automation. Rather than replacing analysts’ expertise, AI enhances operations by scaling capabilities, improving efficiency, and freeing analysts to focus on high-value tasks. Taking a balanced approach keeps SOCs adaptable and human-centric, ensuring they can meet evolving security challenges. Want to learn more? Explore the demo gallery to see Dropzone AI in action or download the evaluation guide to understand how Dropzone AI fits into your workflows.