AI-driven threat detection is transforming the cybersecurity landscape by enabling faster, more accurate, and more efficient identification of potential threats. Traditional methods are increasingly overwhelmed by the volume and complexity of modern cyber threats. AI technologies, however, are designed to keep pace with these challenges, leveraging advanced algorithms and continuous learning to detect threats that might otherwise go unnoticed.

This article explores AI-driven threat detection and its core technologies. We’ll also address common concerns about accuracy and transparency.

How AI Enhances Threat Detection

Traditional Security Operations Centers (SOCs) struggle to keep up with the ever-growing flood of alerts and data generated by modern IT environments. As organizations expand, their digital footprints grow, sharply increasing both the volume of data and the number of potential threats. Even highly skilled human analysts can process only so much information in a given time, which makes it increasingly difficult to identify and respond to every potential threat. This is where AI steps in, offering significant improvements in speed, efficiency, accuracy, and precision.

Speed and Efficiency

Traditional threat detection methods are often slow and labor-intensive. Human analysts must sift through massive amounts of data, cross-referencing information from various sources to identify potential threats. This process is time-consuming and prone to errors, especially when analysts are overwhelmed by the sheer volume of alerts.

On the other hand, AI-driven systems can process data at speeds that are impossible for humans. By automating the analysis of vast datasets, AI can rapidly identify potential threats, significantly reducing response times. This is particularly important in environments where speed is critical. Every second saved in detecting and responding to a threat can mean the difference between a contained incident and a full-blown security breach.

The efficiency gains offered by AI are not limited to speed alone. AI systems can continuously monitor and analyze data, providing 24/7 vigilance without the need for breaks or downtime. This constant monitoring reduces the chance that a threat goes unnoticed simply because of when it occurs.

Accuracy and Precision

Another significant advantage of AI-driven threat detection is its ability to improve the accuracy and precision of threat identification. Traditional methods often suffer from high false positive rates, where benign activities are incorrectly flagged as potential threats. These false positives can overwhelm security teams, leading to alert fatigue and increasing the risk that genuine threats will be missed.

AI addresses this issue by using advanced algorithms and machine learning techniques to analyze data more accurately. Machine learning models are trained on vast datasets, allowing them to learn the difference between normal and abnormal behavior. As these models review more data, they continuously refine their understanding, reducing the number of false positives and improving the overall accuracy of threat detection.

By minimizing false positives, AI systems enable security teams to focus on real threats, improving the effectiveness and efficiency of SOC operations.
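To make the terms above concrete, here is a minimal sketch of how precision, recall, and false positive rate could be computed from alert outcomes. The `AlertCounts` type and the numbers are hypothetical and are not taken from any real SOC.

```python
from dataclasses import dataclass


@dataclass
class AlertCounts:
    """Hypothetical tally of detection outcomes over some review period."""
    true_positives: int   # alerts raised on real threats
    false_positives: int  # alerts raised on benign activity
    false_negatives: int  # real threats that raised no alert
    true_negatives: int   # benign activity that raised no alert


def precision(c: AlertCounts) -> float:
    """Share of raised alerts that were real threats."""
    flagged = c.true_positives + c.false_positives
    return c.true_positives / flagged if flagged else 0.0


def recall(c: AlertCounts) -> float:
    """Share of real threats that raised an alert."""
    threats = c.true_positives + c.false_negatives
    return c.true_positives / threats if threats else 0.0


def false_positive_rate(c: AlertCounts) -> float:
    """Share of benign activity that was incorrectly flagged."""
    benign = c.false_positives + c.true_negatives
    return c.false_positives / benign if benign else 0.0


# Illustrative numbers only: 200 alerts raised, 40 of them genuine.
counts = AlertCounts(true_positives=40, false_positives=160,
                     false_negatives=10, true_negatives=9790)

print(precision(counts))            # 0.2
print(recall(counts))               # 0.8
print(false_positive_rate(counts))  # roughly 0.016
```

In this made-up example the false positive rate looks small, yet four out of five alerts are still noise, which is the kind of workload that drives alert fatigue.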

Core Technologies Behind AI-Driven Threat Detection

AI-driven threat detection relies on a range of advanced technologies, each of which plays a crucial role in enhancing the effectiveness of security operations. Understanding these core technologies is essential for appreciating the full capabilities of AI-driven systems and making informed decisions about their implementation.

Machine Learning and Algorithms

Machine learning is at the heart of AI-driven threat detection. It involves training algorithms to recognize patterns and make decisions based on data. Different types of machine learning models are used in threat detection, each with strengths and weaknesses.

- Supervised Learning: Models train on labeled datasets where the outcomes are known. This type of learning is effective for identifying known threats but may struggle with novel attacks that differ significantly from the training data.

- Unsupervised Learning: Models train on unlabeled data, allowing them to identify patterns and anomalies without prior knowledge of what constitutes a threat. These models are excellent for detecting new and emerging threats that do not match known patterns.

- Reinforcement Learning: Models learn to make decisions through trial and error, receiving feedback on their actions. This approach is well suited to developing adaptive security measures that can respond to changing threat landscapes.

Each of these models offers unique benefits and challenges. Supervised learning is highly accurate for known threats but may struggle with new attack vectors. Unsupervised learning excels at detecting unknown threats but may produce more false positives. Reinforcement learning offers adaptability but requires significant computational resources.

Understanding the different types of machine learning models and their applications in threat detection allows organizations to choose the right tools for their specific needs.
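The contrast between supervised and unsupervised learning can be sketched in a few lines. This is a toy illustration, assuming two made-up features per host (failed logins per hour, megabytes uploaded); the nearest-centroid classifier and the z-score check stand in for real models, and all names and data are hypothetical. Reinforcement learning is left out to keep the example short.

```python
import math
from statistics import mean, pstdev


def centroid(points):
    """Average position of a group of feature vectors."""
    return tuple(mean(axis) for axis in zip(*points))


def train_supervised(labeled):
    """Supervised: learn one centroid per known label."""
    by_label = {}
    for features, label in labeled:
        by_label.setdefault(label, []).append(features)
    return {label: centroid(pts) for label, pts in by_label.items()}


def classify(centroids, features):
    """Assign the label whose centroid is closest to the sample."""
    return min(centroids, key=lambda label: math.dist(centroids[label], features))


def fit_unsupervised(unlabeled):
    """Unsupervised: learn the mean and spread of each feature, no labels."""
    return [(mean(col), pstdev(col)) for col in zip(*unlabeled)]


def is_outlier(profile, features, threshold=3.0):
    """Flag a sample if any feature is far from what was seen so far."""
    return any(
        std > 0 and abs(x - mu) / std > threshold
        for x, (mu, std) in zip(features, profile)
    )


# Features: (failed logins per hour, MB uploaded). Illustrative data only.
labeled = [((1, 5), "benign"), ((2, 8), "benign"), ((0, 4), "benign"),
           ((40, 6), "brute_force"), ((55, 3), "brute_force")]
unlabeled = [(1, 5), (2, 8), (0, 4), (1, 6), (2, 7), (1, 5)]

centroids = train_supervised(labeled)
profile = fit_unsupervised(unlabeled)

known_attack = (48, 5)    # resembles the labeled brute-force examples
novel_attack = (1, 900)   # large upload, unlike anything in the labels

print(classify(centroids, known_attack))  # brute_force
print(classify(centroids, novel_attack))  # benign (the novel pattern is missed)
print(is_outlier(profile, novel_attack))  # True (flagged as unusual)
```

The supervised model recognizes the attack it was trained on but files the unfamiliar one under "benign", while the unsupervised check flags it only as unusual, without saying what kind of threat it is.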

Pattern Recognition and Anomaly Detection

One of the most powerful capabilities of AI-driven threat detection is its ability to recognize patterns and detect anomalies. Even with the best training, human operators may miss subtle patterns in data that indicate a threat. AI, however, can analyze vast amounts of data, identifying correlations and patterns that would be impractical for humans to find manually.

Pattern recognition involves analyzing data to identify sequences or trends indicative of malicious activity. For example, an AI system might detect a pattern of failed login attempts followed by a successful login from a different location, signaling a potential account compromise.
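The login example could be expressed as a simple rule over an event stream. The sketch below is a hand-written rule rather than a learned model, and it assumes "a different location" means a location not seen among the preceding failed attempts. The `LoginEvent` type, the thresholds, and the sample data are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class LoginEvent:
    user: str
    timestamp: int  # seconds since some reference point
    success: bool
    location: str


def find_suspicious_logins(events, min_failures=3, window_seconds=600):
    """Return successful logins that follow a burst of failures
    and come from a location not seen among those failures."""
    suspicious = []
    recent_failures = {}  # user -> failed events still inside the window
    for event in sorted(events, key=lambda e: e.timestamp):
        failures = recent_failures.setdefault(event.user, [])
        # Drop failures that are too old to count toward the pattern.
        failures[:] = [f for f in failures
                       if event.timestamp - f.timestamp <= window_seconds]
        if not event.success:
            failures.append(event)
            continue
        failed_locations = {f.location for f in failures}
        if len(failures) >= min_failures and event.location not in failed_locations:
            suspicious.append(event)
        failures.clear()  # a successful login resets the failure streak
    return suspicious


events = [
    LoginEvent("alice", 0, False, "Berlin"),
    LoginEvent("alice", 30, False, "Berlin"),
    LoginEvent("alice", 60, False, "Berlin"),
    LoginEvent("alice", 90, True, "Lagos"),
    LoginEvent("bob", 10, False, "Paris"),
    LoginEvent("bob", 20, True, "Paris"),
]

flagged = find_suspicious_logins(events)
print([(e.user, e.location) for e in flagged])  # [('alice', 'Lagos')]
```

Bob's single mistyped password followed by a login from the same place is ignored, while Alice's three failures followed by a success from elsewhere are flagged.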

Anomaly detection, on the other hand, focuses on identifying deviations from normal behavior. AI systems can establish a baseline of normal activity for a user or system and then detect when behavior deviates from this baseline. For example, if a user who typically logs in from a single location suddenly logs in from multiple locations in quick succession, the AI system might flag this as suspicious.
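A baseline-and-deviation check of this kind might look like the following sketch. It assumes the only feature is the number of distinct login locations per day for one user, and it scores new observations by how many standard deviations they sit above the baseline. The function names, the `min_std` floor, and the threshold of 3 are illustrative choices, not a recommendation.

```python
from statistics import mean, pstdev


def build_baseline(history):
    """Summarize past behavior as a mean and standard deviation."""
    return mean(history), pstdev(history)


def anomaly_score(baseline, observed, min_std=0.5):
    """Standard deviations between the observation and the baseline mean.
    min_std keeps very stable users from producing huge scores."""
    mu, std = baseline
    return (observed - mu) / max(std, min_std)


def is_anomalous(baseline, observed, threshold=3.0):
    return anomaly_score(baseline, observed) > threshold


# Distinct login locations per day for one user (illustrative data).
history = [1, 1, 1, 2, 1, 1, 1, 1]
baseline = build_baseline(history)

print(anomaly_score(baseline, 4))  # 5.75
print(is_anomalous(baseline, 4))   # True
print(is_anomalous(baseline, 1))   # False
```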

By combining pattern recognition and anomaly detection, AI-driven systems can identify a wide range of threats, from known attack vectors to new and emerging threats that have not been seen before.

Integration with Other Security Technologies

AI-driven threat detection does not operate in isolation. To be effective, it must integrate seamlessly with existing security infrastructure, including Security Information and Event Management (SIEM) systems, firewalls, Endpoint Detection and Response (EDR) platforms, and other security tools.

Integration is essential for ensuring comprehensive security coverage. AI systems must be able to access data from all relevant sources, analyze it in real time, and communicate their findings to other security tools. This requires a high degree of compatibility and interoperability between AI-driven systems and existing security technologies.

When properly integrated, AI-driven threat detection can enhance the capabilities of existing security tools, providing deeper insights and more accurate threat identification. For example, an AI system integrated with a SIEM platform can analyze data from multiple sources, identifying correlations that the SIEM might have missed. Similarly, integrating AI with EDR platforms can enhance endpoint security by providing more accurate threat detection and faster response times.
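Cross-source correlation can be illustrated with a small sketch that groups events by host and looks for signals from more than one tool within a short time window. The event shape (`source`, `host`, `timestamp`, `signal`), the five-minute window, and the sample data are assumptions made for this example and do not reflect any particular SIEM or EDR product's format.

```python
from collections import defaultdict


def correlate(events, window_seconds=300, min_sources=2):
    """Report hosts where at least min_sources different tools
    raised a signal within window_seconds of each other."""
    by_host = defaultdict(list)
    for event in events:
        by_host[event["host"]].append(event)

    incidents = []
    for host, host_events in by_host.items():
        host_events.sort(key=lambda e: e["timestamp"])
        for i, first in enumerate(host_events):
            # Events on this host that fall inside the window starting at `first`.
            cluster = [e for e in host_events[i:]
                       if e["timestamp"] - first["timestamp"] <= window_seconds]
            sources = {e["source"] for e in cluster}
            if len(sources) >= min_sources:
                incidents.append({
                    "host": host,
                    "sources": sorted(sources),
                    "signals": [e["signal"] for e in cluster],
                })
                break  # one incident per host is enough for this sketch
    return incidents


events = [
    {"source": "firewall", "host": "ws-17", "timestamp": 100,
     "signal": "outbound connection to rare domain"},
    {"source": "edr", "host": "ws-17", "timestamp": 220,
     "signal": "unsigned binary executed"},
    {"source": "auth", "host": "ws-42", "timestamp": 150, "signal": "failed login"},
    {"source": "auth", "host": "ws-42", "timestamp": 900, "signal": "failed login"},
]

incidents = correlate(events)
print([(i["host"], i["sources"]) for i in incidents])
# [('ws-17', ['edr', 'firewall'])]
```

Neither signal on `ws-17` may be alarming alone, but two tools reporting on the same host within minutes is the kind of correlation that is easy to miss when each tool is reviewed separately.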

Addressing Common Concerns: Accuracy and Model Transparency

While the benefits of AI-driven threat detection are clear, many organizations have concerns about the accuracy and transparency of AI models. These concerns are understandable, given accuracy's critical role in cybersecurity and the complexity of AI systems.

Ensuring Accuracy in AI Models

One of the most common concerns about AI-driven threat detection is whether AI systems can be trusted to detect threats accurately. Skepticism about AI's accuracy often stems from the complexity of the models and the perception that they are “black boxes” that operate without clear oversight.

To ensure accuracy, AI systems are trained and validated using rigorous processes. During the training phase, models are exposed to vast datasets that include examples of normal and malicious activity. The models learn to distinguish between benign and harmful behavior by analyzing these datasets.

Validation is a critical step in ensuring that AI models perform as expected in real-world scenarios. During validation, models are tested on new data they have not seen before, allowing developers to assess their accuracy and identify areas for improvement.
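The idea of testing on unseen data can be shown with a deliberately simple model: a single score cutoff fitted on training samples and then checked against a holdout set. The risk scores, labels, and function names below are invented for illustration.

```python
def accuracy(samples, threshold):
    """Share of samples where 'score >= threshold' matches the true label."""
    correct = sum((score >= threshold) == is_malicious
                  for score, is_malicious in samples)
    return correct / len(samples)


def fit_threshold(samples):
    """Pick the cutoff that gives the best accuracy on the training samples."""
    candidates = sorted({score for score, _ in samples})
    return max(candidates, key=lambda t: accuracy(samples, t))


# (risk score, is_malicious) pairs. Illustrative data only.
train = [(0.10, False), (0.20, False), (0.35, False), (0.55, False),
         (0.60, True), (0.70, True), (0.90, True)]
holdout = [(0.15, False), (0.30, False), (0.45, True),
           (0.65, True), (0.80, True), (0.58, False)]

threshold = fit_threshold(train)
print(threshold)                     # 0.6
print(accuracy(train, threshold))    # 1.0
print(accuracy(holdout, threshold))  # 5 of 6 correct, about 0.83
```

The cutoff looks perfect on the data it was fitted to and is less accurate on data it has not seen, which is the gap validation is meant to expose before a model reaches production.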

Continuous learning is another important factor in maintaining accuracy. AI models are not static; they continuously learn from new data, refining their detection capabilities over time. This allows them to adapt to emerging threats, ensuring they remain effective even as the threat landscape evolves.
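One simple form of learning from new data is a baseline that updates with every observation instead of being recomputed from scratch. The sketch below uses Welford's online algorithm for a running mean and standard deviation. The `RunningBaseline` class is hypothetical, and real systems typically retrain far richer models than this.

```python
class RunningBaseline:
    """Running mean and standard deviation, updated one value at a time
    (Welford's online algorithm)."""

    def __init__(self):
        self.count = 0
        self.mean = 0.0
        self._m2 = 0.0  # running sum of squared deviations from the mean

    def update(self, value):
        self.count += 1
        delta = value - self.mean
        self.mean += delta / self.count
        self._m2 += delta * (value - self.mean)

    @property
    def std(self):
        """Population standard deviation of the values seen so far."""
        return (self._m2 / self.count) ** 0.5 if self.count else 0.0


baseline = RunningBaseline()
for daily_alert_count in [2, 4, 4, 4, 5, 5, 7, 9]:  # illustrative data
    baseline.update(daily_alert_count)

print(baseline.mean)  # approximately 5.0
print(baseline.std)   # approximately 2.0
```

A design point worth considering with any self-updating baseline is which observations are allowed to update it, since feeding in unreviewed activity could gradually teach the model that malicious behavior is normal.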

Transparency in AI Operations

Transparency is another major concern for organizations considering AI-driven threat detection. The complexity of AI models can make understanding how they make decisions difficult, leading to concerns about accountability and trust.

AI systems need to be as transparent as possible to address these concerns. This means clearly explaining how models are trained, how they make decisions, and how they are validated. Organizations should have access to detailed documentation and reports explaining the AI system’s inner workings, allowing them to assess its reliability and effectiveness.

Transparency also involves providing organizations with the tools to monitor and audit AI systems. This includes reviewing decision-making processes, analyzing model performance, and adjusting settings as needed. By offering these capabilities, AI providers can help organizations build trust in their systems and ensure they are used effectively.
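As a rough illustration of what an auditable decision could look like, the sketch below scores an event with a simple weighted sum and records how much each feature contributed. The feature names, weights, and threshold are hypothetical, and a linear score is used only because its contributions are easy to read; it is not how any specific product explains its decisions.

```python
import json

# Hypothetical weights and cutoff for a transparent linear risk score.
WEIGHTS = {"failed_logins": 0.5, "new_location": 2.0, "off_hours": 1.0}
THRESHOLD = 3.0


def score_with_explanation(features):
    """Return the decision together with each feature's contribution."""
    contributions = {name: WEIGHTS[name] * features.get(name, 0)
                     for name in WEIGHTS}
    total = sum(contributions.values())
    return {
        "score": total,
        "threshold": THRESHOLD,
        "flagged": total >= THRESHOLD,
        "contributions": contributions,
    }


record = score_with_explanation(
    {"failed_logins": 4, "new_location": 1, "off_hours": 0}
)
audit_line = json.dumps(record, sort_keys=True)  # one line per decision
print(audit_line)
```

Writing a record like this for every decision gives reviewers something concrete to audit: the score, the cutoff in force at the time, and which inputs pushed the event over it.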

The Future of Threat Detection with AI

As AI and machine learning evolve, their role in threat detection will only grow more significant. While AI-driven systems offer unparalleled speed, accuracy, and efficiency, human expertise remains essential in interpreting complex threats and making critical decisions. AI enhances human capabilities but does not replace them.

Looking ahead, we can expect AI-driven threat detection to become even more sophisticated, with advancements in machine learning, pattern recognition, and integration with emerging technologies like quantum computing and blockchain. These developments will further enhance the capabilities of SOCs, enabling them to stay ahead of the evolving threat landscape.

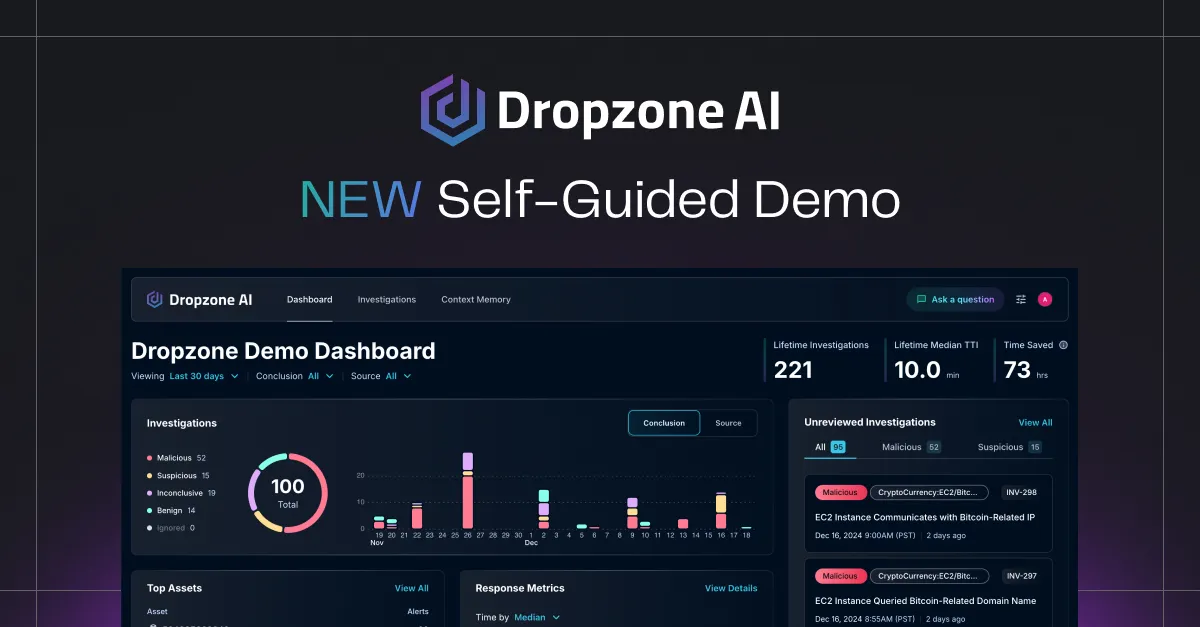

Besides detecting threats, AI is also important for investigating the alerts generated by threat detection tools. This is what Dropzone's AI SOC analyst does, using agentic AI technology to automate the triage and investigation of Tier 1 alerts so that human analysts can focus on tasks that require human intelligence. If you’re ready to explore AI’s potential in your SOC, we invite you to learn more about Dropzone AI.

Schedule a demo today to see Dropzone AI in action and discover how it can improve your cybersecurity.